How to write a test strategy

I’ve documented my overall approach to rapid, lightweight test strategy before, but thought it might be helpful to post an example. If you haven’t read the original post, read that first.

This is a sanitised version of the first test strategy I ever wrote, and while there are some concessions to enterprise concerns, it mostly holds up as a useful example of how a strategy might look. I’m not defending this as a good strategy, but I think it worked as a good record of an agreed approach.

Project X Test Strategy

Purpose

The purpose of this document is –

- To ensure that testing is in line with the business objectives of Company.

- To ensure that testing is addressing critical business risks.

- To ensure that tradeoffs made during testing accurately reflect business priorities.

- To provide a framework that allows testers to report test issues in a pertinent and timely manner.

- To provide guidance for test-related decisions.

- To define the overall test strategy for testing in accordance with business priorities and agreed business risks.

- To define test responsibilities and scope.

- To communicate understanding of the above to the project team and business.

Background

Project X release 2 adds reporting for several new products, and a new report format.

The current plan is to add metric capture for the new products, but not generate reports from this data until the new reports are ready. Irrespective of whether the complete Project X implementation is put into production, the affected products must still be capturing Usage information.

Key Features

- Generation of event messages by the new products (Adamantium, DBC, Product C, Product A and Product B).

- New database schema for event capture and reporting

- New format reports with new fields for added products

- Change of existing events to work with the new database schema

Key Dates

- UAT – Mon 26/03/07 to Tue 10/04/07

- Performance/Load – Wed 28/03/07 to Wed 11/04/07

- Production – Thu 12/04/07

Key Risks

| Risk | Impact | Mitigation Strategy | Risk area |
| --- | --- | --- | --- |
| The team’s domain knowledge of applications being modified is weak or incomplete for key products. | Impact of changes to existing products may be misjudged by the development team and products adversely affected. | | Project |
| Data architect being replaced. | Supporting information that is necessary for generating reports may not be captured. There may be some churn in technical details. | | Project |
| Strategy for maintaining version 1 and version 2 of the ZING database in production has not been defined. | Test strategy may not be appropriate. | None. | Project |
| Insufficient time to test all events and perform regression testing of existing products. | Events not captured, not captured correctly, or applications degraded in functionality or performance | These two activities will free testers to focus on QTP regression scripts. | Project |
| Technical specifications and mapping documents not ready prior to story development | Mappings may be incorrect. Test to requirement traceability difficult to retrofit. | Retrofit where time permits. Business to determine value of this activity. | Project |
| XML may not be correctly transformed | Incorrect data will be collected | Developers will use dBUnit to perform integration testing of XML to database mapping. This will minimise error in human inspection. | Product |
| Usage information may be lost. It is critical that enough information be captured to relate event information to customers, and that the information is correct. | No Usage information available. | Alternate mechanisms exist for capturing information for Product A and Product B. Product B needs to implement a solution. Regression testing needs to ensure that existing Product D events are unaffected. | Product |
| No robust and comprehensive automated regression test suite for PRODUCT D components. May not be time to develop a full suite of QTP tests for all events and field mappings. | PRODUCT D regressions introduced, or regression testing of Product D requires extra resourcing. | Will attempt to leverage PRODUCT D scripts from other projects and existing scripts, while extending the QTP suite. | |
| Project X changes affect performance of existing products | Downtime of Product D products and/or loss of business. | Performance testing needs to cover combined product tests and individual products compared to previous benchmark performance results | Parafunctional |
| Project X may affect products when under stress | Downtime of Product D products and/or loss of business. | Volume tests should simulate large tables, full disks and overloaded queues to see impact to application performance. | Parafunctional |
| Reliability tests may not have been performed previously. That is, tests that all events were captured under load. (I need to confirm this) | Usage information may be going missing | Performance testing should include some database checks to ensure all messages are being stored | Historical |
| Unable to integrate with the new reports prior to release of new event capturing. | Important data may not be collected or data may not be suitable for use in reports. | | Product |

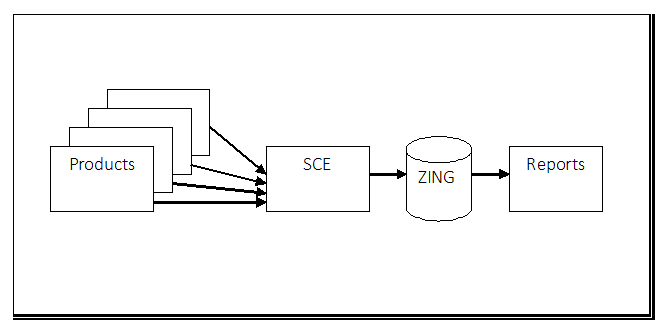

Project X Strategy Model

The diagram above defines the conceptual view of the components for testing. From this model, we understand the key interfaces that pertain to the test effort, and the responsibilities of different subsystems.

Products (Product D, Product A, Product C, Product B)

Capabilities

- Generate events

Responsibilities

- Event messages should be generated in response to the correct user actions.

- Event messages should contain the correct information

- Event messages should be well-formed XML

- Error handling?
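The well-formedness responsibility above is the easiest of these to check mechanically. The following is a minimal sketch in Python rather than the project's own tooling; the event payloads are hypothetical, since the real event schema is not shown in this post.

```python
import xml.etree.ElementTree as ET


def is_well_formed(xml_text: str) -> bool:
    """Return True if xml_text parses as XML, False otherwise."""
    try:
        ET.fromstring(xml_text)
        return True
    except ET.ParseError:
        return False


# Hypothetical event payloads (assumption: not the real Project X schema).
good_event = "<event type='search'><customerId>42</customerId></event>"
bad_event = "<event type='search'><customerId>42</event>"  # mismatched tag

good_result = is_well_formed(good_event)
bad_result = is_well_formed(bad_event)
```

This only confirms that a message parses. Whether it carries the correct information is a separate check against the mapping documents.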

SCE

Capabilities

- Receive events

- Pass events to the ZING database

Responsibilities

- Transform event XML to correct fields in ZING database for each event type

- Error handling?

Reports

Capabilities

- Transform raw event information into aggregate metrics

- Re-submit rejected events to ZING

- Generate reports

Responsibilities

- Correctly generate reports for event data which meets specifications

- Correct data and re-load into ZING.

Interfaces

Product to SCE

This interface will not be tested in isolation.

SCE to ZING

Developers will be writing dBUnit integration tests, which will take XML messages and verify that the values in the XML are mapped to the correct place in the ZING database.
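As an illustration of the kind of check described here, the sketch below uses Python's standard library with an in-memory SQLite database as a stand-in. It is not dBUnit, and the table, column and element names are assumptions made up for the example, not the ZING schema.

```python
import sqlite3
import xml.etree.ElementTree as ET

# Hypothetical mapping from XML element names to database columns.
FIELD_MAP = {"customerId": "customer_id", "searchType": "search_type"}


def load_event(conn: sqlite3.Connection, xml_text: str) -> None:
    """Stand-in for the transform under test: insert one row from an XML event."""
    root = ET.fromstring(xml_text)
    row = {column: root.findtext(tag) for tag, column in FIELD_MAP.items()}
    conn.execute(
        "INSERT INTO events (customer_id, search_type) "
        "VALUES (:customer_id, :search_type)",
        row,
    )


# In-memory database standing in for the real event store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (customer_id TEXT, search_type TEXT)")

load_event(
    conn,
    "<event><customerId>42</customerId>"
    "<searchType>business_name</searchType></event>",
)

# Compare what was stored against the expected row, field by field.
stored = conn.execute("SELECT customer_id, search_type FROM events").fetchall()
expected = [("42", "business_name")]
assert stored == expected, f"mapping mismatch: {stored!r}"
```

The point of automating this comparison is the one made in the risk table: it removes the error that comes with inspecting mapped fields by eye.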

ZING to Reports

The reporting component will not be available to test against, and domain expertise may not be as strong as for previous releases with the departure of senior personnel. Available domain experts will be involved as early as possible to validate the contents of the ZING database.

Product to ZING

System testing will primarily focus on driving the applications and ensuring that –

- Application’s function is unaffected

- Product generates events in response to correct user actions

- XML can be received by SCE

- Products send the correct data through

Key testing focus

- Ensuring existing event capture is unaffected (PRODUCT D).

- Ensuring event details are correctly captured for systems. This is more critical for systems in which there is currently no alternative capture mechanism (Product C, Adamantium, DBC). Alternative event capture mechanisms exist for Product B and Product A.

- Ensuring existing system functionality is not affected. Responsibility for Product C’s regression testing will lie with Product C’s team. There is no change to the Product B application, but sociability testing may be required for log processing. Product A has an effective regression suite (selenium), so the critical focus is on testing of PRODUCT D functionality.

Test prioritisation strategy

These factors guide prioritisation of testing effort:

- What is the application’s visibility? (i.e. cost of failure)

- What is the application’s value? (i.e. revenue)

For the products in scope, cost of failure and application value are proportional.

There may be other strategic factors as presented by the business as we go, but the above are the primary drivers.

Priority of products –

- Adamantium/Product D

- Product C

- Product A

- Product B

- DBC

Within Product D, the monthly Usage statistics show the following –

- 97% of searches are business type or business name searches.

- 3% of searches are browse category searches

- Map based searches are less than 0.2% of searches

Test design strategy

Customer (Acceptance) Tests

For each event, test cases should address:

- Ensuring modified applications generate messages in all expected situations.

- Ensuring modified applications generate messages correctly (correct data and correct XML).

- Ensuring valid messages can be processed by SCE.

- Ensuring valid messages are transformed correctly and go to the specified database fields.

- Ensuring data in the database is acceptable for reporting needs.

Regression Tests

For each product where event sending functionality is added:

- All other application functionality should be unchanged

Additionally, the performance test phase will measure the impact of modifications to each product.
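Measuring impact against a previous benchmark can be reduced to a simple comparison. This is a rough sketch only: the response times and the 10% tolerance are illustrative assumptions, not figures from the project.

```python
def find_regressions(baseline_ms, current_ms, tolerance=0.10):
    """Return products whose current time exceeds baseline by more than tolerance.

    Both arguments map product name to a response time in milliseconds.
    The result maps product name to a (baseline, current) pair.
    """
    regressions = {}
    for product, base in baseline_ms.items():
        current = current_ms[product]
        if current > base * (1 + tolerance):
            regressions[product] = (base, current)
    return regressions


# Illustrative numbers only (assumption: not real benchmark results).
baseline = {"Product D": 200.0, "Product A": 150.0}
current = {"Product D": 260.0, "Product A": 155.0}

regressions = find_regressions(baseline, current)
for product, (base, now) in regressions.items():
    print(f"{product}: {base:.0f} ms -> {now:.0f} ms")
```

The tolerance is a business decision rather than a technical one, so it would need to be agreed with stakeholders before results are reported.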

Risk Factors

These tests correspond to the following failure modes –

- Events are not captured at all.

- Events are captured in a way which renders them unusable.

- Systems whose code is instrumented to allow sending of events to SCE are adversely affected in their functionality.

- Event data is mapped to field(s) incorrectly

- Performance is degraded

- Data is unsuitable for reporting purposes
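The first failure mode, events not being captured at all, can be checked by reconciling what the test driver sent against what reached the database. A minimal sketch, assuming each event carries a unique identifier (an assumption; the post does not say whether one exists):

```python
def missing_events(sent_ids, stored_ids):
    """Return the IDs that were sent but never stored, in sorted order."""
    return sorted(set(sent_ids) - set(stored_ids))


# Hypothetical identifiers: what the load driver sent vs. what a query returned.
sent = ["e1", "e2", "e3", "e4"]
stored = ["e1", "e3", "e4"]

missing = missing_events(sent, stored)
print(f"{len(missing)} of {len(sent)} events missing: {missing}")
```

Run during the performance phase, the same comparison addresses the reliability risk of events going missing under load.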

Team Process

The development phase will consist of multiple iterations.

- At the beginning of each iteration, the planning meeting will schedule stories to be undertaken by the development team.

- The planning meeting will include representatives from the business, test and development teams.

- The goal of the planning meeting is to arrive at a shared understanding of scope for each story and acceptance criteria and record that understanding via acceptance tests in JIRA.

- The team will collaborate throughout the iteration to ensure that stories are tested to address the business needs (as defined by business representatives and specifications) and risks (as defined by business representatives and agreed to in this document). This may include testing by business representatives, system testers and developers.

- The status of each story will be recorded in JIRA.

When development iterations have delivered the functionality agreed to by the business, deployment to environments for UAT and Performance and Load testing will take place.

Deliverables

High priority

- QTP regression suite for PROJECT X events (including Adamantium, DBC) related to business type and business name searches

- Test summary report prior to go/no go meeting

Secondary priority

- QTP regression suite for Product A (Lower volume, fewer events and the application already collects metrics). Manual scripts and database queries will be provided in lieu of this.

Other

- Product C should create PROJECT X QTP regression tests as part of their development work

- Product B test suite will likely not be a QTP script as log files are being parsed as a batch process. GUI regression scripts will be suitable when Product B code is instrumented to add event generation. If time permits, we will attempt to develop a tool to parse a log file and confirm that the correct events were generated.
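The log-parsing tool mentioned for Product B might look something like the sketch below. The log line format, the event type names and the expected counts are all assumptions for illustration; the real Product B log layout is not described in this post.

```python
import re
from collections import Counter

# Hypothetical log line format (assumption): "... EVENT type=<name> ...".
EVENT_LINE = re.compile(r"EVENT type=(?P<type>\w+)")


def count_events(log_lines):
    """Count events by type across the lines that match the assumed format."""
    counts = Counter()
    for line in log_lines:
        match = EVENT_LINE.search(line)
        if match:
            counts[match.group("type")] += 1
    return counts


sample_log = [
    "2007-03-26 10:15:02 INFO request received",
    "2007-03-26 10:15:02 EVENT type=search customer=42",
    "2007-03-26 10:15:09 EVENT type=view customer=42",
    "2007-03-26 10:16:30 EVENT type=search customer=7",
]

# Expected counts would come from the scripted user actions that produced the log.
expected = {"search": 2, "view": 1}
actual = dict(count_events(sample_log))
assert actual == expected, f"expected {expected}, got {actual}"
```

Comparing counts by type is the cheapest useful check. Confirming the content of each event would need the field mappings as well.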

To do

- Confirm strategy with stakeholders

- Confirm test scope with Product C testers

- Confirm events that are in scope for this release

- Define scope of Product D testing and obtain Product D App. Sustain team testers for regression testing.
